Friday, January 30, 2009

(Update) Wall Street Cloud Interoperability Forum - NYC April 2nd

At this point we're still looking for a few more sponsors, but have confirmation from Cisco, Intel, Sun, France Telecom / Orange, Thomson Reuters, Enomaly, Zeronines, & SOASTA. If you're interested in sponsoring, please get in touch.

A formal invitation will be sent out later next week. Given the amount of interest we have for this event, unless you're a sponsor, we will limit attendance to no more than two people per relevant organization. We are also limiting attendance to either financial institutions or companies / individuals who have an active involvement in cloud computing or the advancement of cloud interoperability / standards.

Thursday, January 29, 2009

Google Releases Hybrid OpenID OAuth Extension

For consumer developers, OAuth is a method to publish and interact with protected personal data. For service provider developers, OAuth gives users access to their data while protecting their account credentials. In other words, OAuth allows a user to grant access to their information on one site (the Service Provider), to another site (called Consumer), without sharing all of his or her identity.

The new Google-sponsored OpenID OAuth Extension describes how to make the OpenID Authentication and OAuth Core specifications work well together. In its current form, it addresses the use case where the OpenID Provider and OAuth Service Provider are the same service. To provide a good user experience, it is important to present the user with a combined authentication and authorization screen for the two protocols.

This extension describes how to embed an OAuth approval request into an OpenID authentication request to permit combined user approval. For security reasons, the OAuth access token is not returned in the OpenID authentication response. Instead a mechanism to obtain the access token is provided.
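To make the embedding a little more concrete, here is a minimal sketch of what building such a combined request might look like. The `openid.ns.oauth`, `openid.oauth.consumer`, `openid.oauth.scope` and `openid.oauth.request_token` parameter names reflect my reading of the draft extension and should be checked against the spec; the endpoint, consumer key and scope URL are hypothetical placeholders.

```python
from urllib.parse import urlencode

# Namespace URIs as I understand them from the OpenID 2.0 spec and the
# draft OAuth extension; verify against the published documents.
OPENID_NS = "http://specs.openid.net/auth/2.0"
OAUTH_EXT_NS = "http://specs.openid.net/extensions/oauth/1.0"
IDENTIFIER_SELECT = "http://specs.openid.net/auth/2.0/identifier_select"


def build_hybrid_auth_url(endpoint, return_to, realm, consumer_key, scopes):
    """Build an OpenID authentication URL that also carries an OAuth approval request."""
    params = {
        "openid.ns": OPENID_NS,
        "openid.mode": "checkid_setup",
        "openid.claimed_id": IDENTIFIER_SELECT,
        "openid.identity": IDENTIFIER_SELECT,
        "openid.return_to": return_to,
        "openid.realm": realm,
        # The OAuth request rides along as an OpenID extension.
        "openid.ns.oauth": OAUTH_EXT_NS,
        "openid.oauth.consumer": consumer_key,
        "openid.oauth.scope": " ".join(scopes),
    }
    return endpoint + "?" + urlencode(params)


def extract_request_token(response_params):
    """Return the approved request token from the OpenID response, if present.

    The access token itself is not in the response; the request token is
    exchanged for it in a separate OAuth call (not shown here).
    """
    return response_params.get("openid.oauth.request_token")
```

The consumer would redirect the user to the returned URL, then pass the parsed query parameters of the `return_to` callback to `extract_request_token` before doing the usual OAuth access token exchange.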

If you're interested in looking at some code, check out the working sample using the Google Data PHP client library. The source code is available here.

Enomaly ECP 2.2 Released

New additions and improvements include:

ECP Core

- The ECP installer will now automatically generate a uuid for the host. Also, the installer will now synchronize with the local package repository if one exists. Several Xen fixes are now carried out by the installer that allow ECP to better manage Xen machines. The exception handling has also been drastically improved in the installation process.

- The core ECP data module has many new features as well as many bug fixes. Several subtle but detrimental object retrieval issues have been resolved. This alone fixed several issues that were thought to be GUI related in previous ECP versions. The new features include added flexibility to existing querying components and newer, higher level, components have been implemented. These newer components build on the existing components and will provide faster querying in ECP.

- The configuration system has gone through a major improvement. It is now much easier and more efficient to both retrieve and store configuration data. This affects nearly any ECP component that requires configuration values.

- The extension module API now allows extension modules to register static directories as well as javascript. Some of the core extension modules are already taking advantage of this newly offered capability. This helps balance the distribution of responsibilities and increases the separation of concerns among ECP components.

GUI

- There have been many template improvements that promote cross-browser compatibility. Many superfluous HTML elements have been removed and others now better conform to the HTML standard.

- A new jQuery dialog widget has been implemented. This widget is much more robust and visually appealing than the dialog used in previous ECP versions.

- General javascript enhancements will give the client a nice performance boost and improve on the overall client experience.

Testing

- With an emphasis on improving the ECP RESTful API design in this release, the requirement for automatically invoking various ECP resources came about. Included in this release is a new client testing facility that can run tests on any ECP installation. Although the tests are limited, they continue to be expanded with each new ECP release.

vmfeed (extension module)

- A big effort has been undertaken in analyzing the deficiencies of the previous versions of the vmfeed extension module in order to drastically improve its design for this release. One of the major problems was the lack of consistency in the RESTful API provided by vmfeed. Some of the resource names within the API were ambiguous at best while some important resources were missing entirely. There has been a big improvement in both areas for this release.

- Another problem was the design of the code that drives the extension module. Much of the code in vmfeed has been refactored in order to produce a coherent unit of functionality. As always, there is still room for improvement, which will come much more easily in future iterations as a result of these changes.

machinecontrol (extension module)

- In previous ECP versions, when operating in clustered mode, removal of remote hosts was not possible. This has been corrected in this release.

- The machinecontrol extension module will now take advantage of the new ECP configuration functionality.

- When deleting machines, they are now actually undefined by libvirt.

static_networks (extension module)

- The static_networks extension module will now use the newer ECP core functionality in determining the method of the HTTP request.

- Refactoring has taken place to move the static_networks javascript out of the core and into the actual extension module package. This improves the design of the static_networks extension module while reducing the complexity of the ECP core.

- The static_networks extension module will now take advantage of the new ECP configuration functionality.

transactioncontrol (extension module)

- The transactioncontrol extension module will now use the newer ECP core functionality in determining the method of the HTTP request.

clustercontrol (extension module)

- Major improvement in the RESTful API design. Some invalid resources were removed while others were improved upon.

- The clustercontrol extension module will now use the newer ECP core functionality in determining the method of the HTTP request.

- The clustercontrol extension module will now take advantage of the new ECP configuration functionality.

Definition: Cloud Lock-in

Definition: Cloud Semantics

Wednesday, January 28, 2009

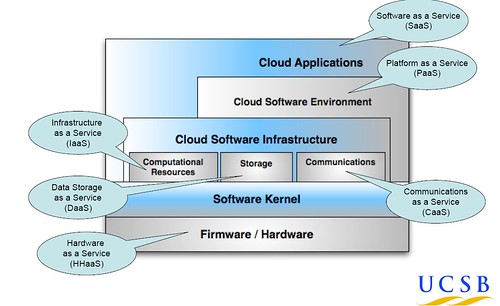

A Unified Ontology for Cloud Computing

The paper lays out a proposed Cloud Computing Ontology: depicted as five layers (Cloud Application Layer, Cloud Software Environment Layer, Cloud Software Infrastructure Layer, Software Kernel, Hardware and Firmware), with three constituents to the cloud infrastructure layer (Computational Resources, Data Storage, Communication). The layered approach represents the inter-dependency and composability between the different layers in the cloud.

The paper proposes "an ontology of this area which demonstrates a dissection of the cloud into five main layers, and illustrates their interrelations as well as their inter-dependency on preceding technologies. Better comprehension of the technology would enable the community to design more efficient portals and gateways for the cloud, and facilitate the adoption of this novel computing approach to computing."

The paper goes on to say, "The current state of the art in cloud computing research lacks the understanding of the classification of the cloud systems, their correlation and inter-dependency. In turn, this obscurity is hindering the advancement of this research field. As a result, the introduction of an organization of the cloud computing knowledge domain, its components and their relations – i.e. an ontology – is necessary to help the research community achieve a better understanding of this novel technology."

Interesting read.

http://www.cs.ucsb.edu/~lyouseff/CCOntology/CloudOntology.pdf

Tuesday, January 27, 2009

The untold (back) story of Amazon EC2

Black outlines a backstory which takes place in early 2004, "i was told jeff was interested in the virtual server as a service idea and asked for a more detailed write up of it. this i did, also incorporating a couple of requests jeff had, like the idea of a "universe" of virtuals, which i translated into network-speak as a distributed firewall to isolate groups of servers."

He also points out that EC2 wasn't a production environment used to host Amazon.com but rather an R&D project. He goes on to say "in the amazon style of "starting from the customer and working backwards", we produced a "press release" and a FAQ to further detail the how and why of what would become EC2. at this point attention turned from these paper pushing exercises to specifics of getting it built."

Read the full post here

The Case Against Commodity Cloud Exchanges

I'm happy to announce that we've launched SpotCloud, the first Cloud Capacity Clearinghouse and Marketplace. Check it out at www.spotcloud.com

--

The concept of a commodity cloud exchange is something that I've been talking about for several years. Notably, Sun Microsystems also proposed it back in 2005. Recently a new start-up spun out of Deutsche Telekom called Zimory is attempting to use this as the nexus of their enterprise-focused hybrid cloud platform. The company describes itself as the first 'global marketplace for cloud resources' to enable organizations to buy or sell extra computing capacity.

For those of you unfamiliar with the idea, a cloud exchange is a central, financially focused exchange where companies are able to trade standardized cloud capacity in the form of futures contracts; that is, contracts to buy specific quantities of compute / storage / bandwidth capacity as a commodity at a specified price, with delivery set at a specified time in the future. The contract details what cloud asset is to be bought or sold, and how, when, where and in what quantity it is to be delivered, similar to a bandwidth exchange or clearing house. The exchange may be public or private, akin to private exchanges / ECNs on the stock market, where membership is by invitation only.
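As a rough illustration of what such a contract has to pin down, here is a minimal sketch of a capacity futures record. The class, field names and units are all hypothetical; no real exchange format is implied.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class CapacityFuture:
    """Hypothetical record of a standardized cloud capacity futures contract."""

    resource: str           # what is traded, e.g. "compute", "storage", "bandwidth"
    quantity: int           # how many standardized units
    unit: str               # what one unit means, e.g. "instance-hour"
    unit_price_cents: int   # agreed price per unit, in cents
    delivery_date: date     # when the capacity must be made available
    region: str             # where the capacity must be delivered

    def total_cost_cents(self) -> int:
        """Total price fixed at trade time, regardless of later market prices."""
        return self.quantity * self.unit_price_cents

    def is_deliverable(self, today: date) -> bool:
        """True once the agreed delivery date has been reached."""
        return today >= self.delivery_date


# Example: 1,000 instance-hours bought ahead of a December rush.
contract = CapacityFuture("compute", 1000, "instance-hour", 12, date(2009, 12, 1), "us-east")
```

Keeping the record immutable mirrors the point of a futures contract: the terms are fixed when the trade is made, and only ownership of the contract changes afterwards.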

As I dug a little deeper into Zimory's web interface I noticed that Zimory is actually not really a marketplace so much as a multi-cloud management platform. The platform does little to address security, auditability, accountability, or trading/futures contracts. My first question is why should I trust their cloud providers and how do I know they're secure?

Another problem with the platform is their approach to capacity access. It appears that you are forced to use their platform, a platform that has no API or web services that I could see. Their approach to an SLA is also not very obvious. They broadly describe three levels, Bronze, Silver and Gold, with no insight into what these levels actually represent. We are to just take them at their word.

Upon closer examination of the Zimory platform it appears to be nothing more than an open source hybrid cloud computing platform with an "ebay" marketing spin. So let's for a moment assume that a Commodity Cloud Exchange is a service that businesses actually want. (I'm not convinced they do.) If this is the case, is a random start-up such as Zimory really in a position to offer such a service? And if so, should this exchange look like ebay or should it look more like a traditional commodities exchange? My opinion is the latter. What worries me about such a cloud exchange is that the first thought that comes to mind is Enron, who attempted a similar bandwidth-focused offering in the late '90s.

If we truly want to enable a cloud computing exchange / marketplace, maybe a better choice would be to build upon an existing exchange platform with a proven history: a platform with an existing level of trust, governance and compliance, such as the Chicago Mercantile Exchange's Globex electronic trading platform or even the NASDAQ.

Creating a cloud exchange has less to do with the technology and more to do with the concept of trust and accountability. If I'm going to buy XX amount of regional capacity for my Christmas rush I want to rest assured that the capacity will actually be available and, more importantly, at a quality of service & location that I've agreed upon. I also need to be assured that the exchange is financially stable and will remain in business for the foreseeable future. Zimory offers none of this.

Trust and security aside, to make Zimory attractive, they need to enable a marketplace that allows its users to buy additional capacity based on economic / costing factors that matter. For example, being able to define a daily budget for your app, similar to the way AdWords spending works. There needs to be fine-grained control over this budget so you can apply it across CPU, network bandwidth, disk storage and location, with a focus on future requirements (futures). I should be able to trade / swap any unused capacity as easily as I originally bought it. There needs to be a provider quota system that allows for the assurance that a certain amount of cloud capacity is always available to the "exchange" with a priority level. There should be multiple types of trading contracts as well as an in-depth audit trail with a clear level of transparency within the entire trading process.
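The daily budget idea can be sketched in a few lines. This is only an illustration under my own assumptions: the `DailyBudget` class, the percentage split and the use of integer cents are all hypothetical, not something Zimory or AdWords exposes.

```python
from dataclasses import dataclass, field


@dataclass
class DailyBudget:
    """Hypothetical daily spending cap split across resource types."""

    total_cents: int                 # overall daily cap, in cents
    share_percent: dict              # resource name -> whole percent of the cap
    spent_cents: dict = field(default_factory=dict)

    def limit(self, resource: str) -> int:
        """Portion of the daily cap reserved for one resource."""
        return self.total_cents * self.share_percent[resource] // 100

    def remaining(self, resource: str) -> int:
        """What is still available to spend on that resource today."""
        return self.limit(resource) - self.spent_cents.get(resource, 0)

    def charge(self, resource: str, amount_cents: int) -> bool:
        """Record a purchase if it fits the remaining budget; refuse it otherwise."""
        if amount_cents > self.remaining(resource):
            return False
        self.spent_cents[resource] = self.spent_cents.get(resource, 0) + amount_cents
        return True


# Example: $100 per day, split 50/30/20 across CPU, bandwidth and storage.
budget = DailyBudget(10000, {"cpu": 50, "bandwidth": 30, "storage": 20})
```

A refused `charge` is the point where a marketplace could offer alternatives, such as swapping unused storage budget for CPU, rather than silently buying more capacity.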

At the end of the day, I'm not convinced we're ready for "standardized" cloud exchanges. The cloud computing industry is still emerging, and there are no agreed-upon standards for how we as an industry can collaborate as partners, let alone trade capacity. In a lot of ways I feel Zimory is putting the cart before the horse and is probably 5-10 years too early.

Sunday, January 25, 2009

Cloud Attack: Economic Denial of Sustainability (EDoS)

The general idea of an EDoS attack is to utilize cloud resources to disable the economic drivers of using cloud computing infrastructure services. In an EDoS attack the goal is to make the cloud cost model unsustainable, so that it is no longer viable for a company to affordably use or pay for its cloud-based infrastructure.

In Hoff's post he says "Specifically, this usage-based model potentially enables $evil_person who knows that a service is cloud-based to manipulate service usage billing in orders of magnitude that could be disguised easily as legitimate use of the service but drive costs to unmanageable levels."

Adam O'Donnell, the Director of Emerging Technologies at Cloudmark, points out that "The billing models that underlie cloud services may not be mature enough to properly account for an EDoS like attack."

What this means is that just using the cloud for the purposes of easily scaling your environment may soon not be enough. Traditional scaling and performance planning may quickly be giving way to cost-based scaling methodologies. These new cost-centric approaches to scaling cloud infrastructure will look at more than just the superficial aspects of your application's load time and instead focus on how much it's actually costing you.
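A cost-aware scaling rule might look something like the sketch below. It is a deliberately simple illustration: the function, the load threshold and the hourly budget in cents are my own assumptions, not any provider's autoscaling API.

```python
def scale_decision(cpu_load, instances, price_cents_per_instance_hour,
                   hourly_budget_cents, load_threshold=0.8):
    """Decide whether to add an instance, weighing load against spend.

    Returns "hold" when load is below the threshold, "scale_out" when load is
    high and one more instance still fits the hourly budget, and "deny" when
    load is high but the extra instance would exceed the budget.
    """
    if cpu_load < load_threshold:
        return "hold"
    projected_cents = (instances + 1) * price_cents_per_instance_hour
    if projected_cents > hourly_budget_cents:
        # High load we cannot afford is exactly the case an EDoS attack
        # tries to create, so it deserves an alert rather than more servers.
        return "deny"
    return "scale_out"
```

With a 10 cent instance and a 50 cent hourly budget, four busy instances would scale out to five, while five busy instances would be denied a sixth.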

The ability to adjust based on real-time economic factors may soon play an equally critical role in a company's decision to use "the cloud" or to continue using it. This is particularly true of infrastructure-as-a-service offerings such as Amazon or GoGrid, where the costs are passed directly on to the users of the service in a pay-per-use fashion.

In the platform-as-a-service world, this may not be as big of an issue because of the economies of scale that companies like Google and Microsoft bring to bear. But for the smaller guys or DIY clouds, this could pose a major problem.

The classic example Amazon and others use is that of Animoto, but what if 50% of Animoto's traffic was purely that of an upset customer looking to break the bank? Never underestimate the power of an upset customer's or ex-employee's vendetta. Worse yet, what if that irate customer used the very cloud as the method to create a denial of sustainability attack? It's become easier than ever to acquire fake credit card numbers.

For a while it seemed that cloud computing was advancing more quickly than criminals, but this is probably going to be a short-lived trend, one which may have already passed. In the very near future the next generation of cloud-based capacity planning and scaling may start to focus more on building cost-based strategies alongside load and user experience: strategies capable of determining the optimal cost while also providing comparisons along with everything else you need to be competitive.

Saturday, January 24, 2009

Technological Universalism & The Unification of IT

There have been some interesting points of view on this topic recently. In particular, Lydia Leong at Gartner offered this insight: "He who controls the entire enchilada — the management platform — is king of the data center. There will be personnel who are empire-builders, seeking to use platform control to assert dominance over more turf." In a nutshell, this is the opportunity Cisco is attempting to go after.

Backing this up are the remarks Padmasree Warrior made in her blog post announcing this broad new vision for the data center. In it she said "IT architectures are changing – becoming increasingly distributed, utilizing more open standards and striving for automation. IT has traditionally been very good at automating everything but IT!"

Cisco's move into server hardware makes a lot of sense for the traditionally "networking" focused company, a company that derives most of its revenue from providing static "boxes" that sit in your data center doing one thing and one thing only. But the trend in IT recently has been the move away from boxed appliances to that of virtualizing everything. Whether networking gear or storage, everything is becoming a virtual machine (VM). The requirement to buy expensive "static" networking gear is quickly becoming a relic of the past. The infrastructure of the future will utilize a series of self-assembling virtual application components that are forever adapting and changing to "current" conditions.

What VMs provide is a kind of Lego building block that can be easily managed and adjusted with little or no effort. What is acting as an application server today may be a network switch or load balancer tomorrow. What's been missing from this vision is a unified interface to accomplish all this. This is the new reality facing niche hardware vendors in the very near future.

What virtualization & cloud computing have done to the IT industry is open its eyes to the potential for the unification of application & infrastructure stacks. These two traditionally separate components are now starting to morph into each other. In the near term it may start to become very difficult to see where your application ends and your infrastructure begins. Services such as Amazon EC2 are just the tip of the iceberg, a place where you can test the waters. The true opportunity will be in the wider adoption of a unified IT stack, one that encompasses all aspects of IT, a kind of technological universalism.

PCI Security Standards Council to Form Virtualization / Cloud SIG

In Hoff's post, he outlined an email from Troy Leach, technical director of the PCI Security Standards Council, in which he said,

"A SIG for virtualization is coming this year but we don't have any firm dates or objectives as of yet. Only those 500-600 companies (which include Vmware, Microsoft, Dell, etc) that are participating organizations or the 1,800+ security assessors can contribute. As you can imagine with those numbers, we already receive thousands of pages of feedback and are obligated to read all comments and suggestions."

If this is true, it could have some fairly broad implications for the wider cloud computing community. As a number of people have pointed out on the CCIF mailing list, security is one of the leading concerns when dealing with cloud computing. I am looking forward to seeing what emerges out of their activities.

Here is the original post

Thursday, January 22, 2009

Cisco Introduces Unified Computing

In a recent post, Cisco CTO Padmasree Warrior says Cisco "views periods of economic uncertainty as the perfect time to challenge the status quo and evolve our business to deliver customer and shareholder value. Cisco’s success has always been driven by investments in market adjacencies during times that may cause other companies to blink."

Guess I'm not the only one promoting a unified cloud interface. Cisco is calling theirs “Unified Computing”. In the post they describe Unified Computing as the advancement toward the next generation data center that links all resources together in a common architecture to reduce the barrier to entry for data center virtualization. In other words, the compute and storage platform is architecturally “unified” with the network and the virtualization platform. Unified Computing eliminates this manual integration in favor of an integrated architecture and breaks down the silos between compute, virtualization, and connect.

Nice work, call me and we'll do a unified lunch.

Forget Cloud Standards, First Think Cloud Consensus

Let's revisit what interoperability actually means. Wikipedia defines it as "a property referring to the ability of diverse systems and organizations to work together (inter-operate)" and a standard as "a document that establishes uniform engineering or technical specifications, criteria, methods, processes, or practices." You can't have a standard without organizations working together.

In a Twitter dialog with Simon Wardley (Services Manager for Canonical / Ubuntu Linux) yesterday he stated his "view that standards will be chosen by the market not committee, however a body which promotes potential standards is viable." I couldn't agree more.

The last thing we need is some new rigid set of cloud standards that are obsolete before the digital ink is even dry. How can we hope to create "standards" before we even fully understand where the true cloud computing opportunities are? What I am advocating for cloud interoperability (in the short term) is an industry consensus or a place (formal or informal) where cloud computing stakeholders are able to come together to collaborate and foster a common vision. This was a motivating factor when we created the first CloudCamps and interoperability events last year. Maybe this takes the form of an industry trade group or alliance or something else; I don't know. What I do know is the more we cooperate as well as collaborate, the better off we'll be as an industry.

One option may be to create some common definitions (taxonomy). This could be the first step in at least defining what cloud computing represents, not as a whole but in the sum of its parts. Trying to define cloud computing in its entirety would be like trying to define the Internet which is in itself an analogy. The great thing about the Internet is it means different things to different people.

As Wardley also pointed out "The best first thing the CCIF can do is come up with a standard taxonomy for the cloud which sticks."

Tuesday, January 20, 2009

Cloud API Propagation and the Race to Zero (Cloud Interoperability)

When thinking about what Cloud Interoperability truly means, for me it's all about the API. There is much talk of ID / Authentication, storage, networking, system management and so forth. The one problem that all these various aspects share is that point of programmatic access / control. What seems to have happened over the last year is what I've started calling "cloud API propagation". Every new cloud service provider puts their own spin on how a user or cloud application interacts with "their cloud".

The problem I see is that each cloud appears to be a silo unto itself. Because each new cloud provides its own new API, your only real option is effectively to go with the largest player, not the best. API propagation is killing the cloud ecosystem by limiting cloud choice and portability. In order to use Amazon, I am being forced to choose their way of cloud interaction / control over any others, including the ability to use my existing data center resources seamlessly. One of my driving factors toward the creation of a unified cloud interface is not only to enable portability (which is nice), but to enable singularity between existing "legacy" environments and the cloud and back again. From what I see of those who are building their entire infrastructure in the cloud, if given the option to move to another cloud provider, chances are they probably wouldn't. Just because you give someone the ability to leave doesn't mean they will.

Benson Schliesser from Savvis raises a very good point in a recent Twitter dialog. He says, "I'm pro-API. But it may also accelerate commoditization. Are prices exposed via the API? Does that make it a race to zero?" He goes on to say "that means there will be niche players and everybody else is just LCD. Enabled by an app that finds lowest price via API."

So the question is who does cloud interoperability really benefit? I would say legacy businesses who have existing data centers as well as emerging cloud providers who are looking to compete not based on a unique API but rather on other unique service components they offer.

Sure, there will always be the value focused customers, who choose the lowest cost option. But there will also be cloud consumers who are looking for specific answers to their particular problems. Those are where the true opportunities are.

Being a cloud provider shouldn't be about your API. It should be about the applications it enables. I'm going to choose to use the Savvis or GoGrid or Amazon cloud because they provide better support, SLAs, QoS, geographic capabilities or possibly something I've never even imagined. Those are the ways you can differentiate your cloud. Saying "our API is better" is a poor marketing strategy. I believe saying your cloud is compatible or can work with what you already have is a better one.

GoGrid Open Sources their API

Back to the API: the license allows for the following:

- Share, distribute, display and perform the work

- Make derivative works

The GoGrid cloudcenter API re-use must, however, fall under the following Share Alike licensing conditions:

- There must be full attribution to GoGrid, author and licensor

- There is no implied endorsement by GoGrid of any works derived from the API usage or rework

- After any transformation, alteration or building upon this work, any distribution must be under the same, a similar or a compatible license

- You must make it clear to others about the terms of this license. The best way to do this is by linking to the GoGrid Wiki API page (link below)

- Any of the conditions mentioned previously can be waived with permission from GoGrid

Details on the GoGrid cloudcenter OpenSpec API license can be found on the GoGrid site; the license is specific to the API only. All content provided on the Wiki in the API "namespace" is covered by this Share Alike license, specifically under this URL: http://wiki.gogrid.com/wiki/index.php/API. Note, however, that this license applies only to content provided within the namespace plus any pages constrained by the URL plus a colon (":"). For example:

INCLUDED under the license:

- http://wiki.gogrid.com/wiki/index.php/API:Anatomy_of_a_GoGrid_API_Call

- http://wiki.gogrid.com/wiki/index.php/API:Common_API_Call_Patterns

- http://wiki.gogrid.com/wiki/index.php/API:PHP_API_Developer_Home

NOT INCLUDED under the license:

London CloudCamp #3 (Mar 12, 2009)

You are invited to the following event:

London CloudCamp #3

Date:

Thursday, March 12, 2009 from 6:45 PM - 10:00 PM (GMT)

----------------------------------

Following the great success of the first two London CloudCamps, and various others around the globe, this time CloudCamp is joining up with QCon (http://qconlondon.com) and taking advantage of the prestigious Queen Elizabeth II Conference Center facilities.

CloudCamp is an unconference where early adopters of Cloud Computing technologies exchange ideas. With the rapid change occurring in the industry, we need a place we can meet to share our experiences, challenges and solutions. At CloudCamp, you are encouraged to share your thoughts in several open discussions, as we strive for the advancement of Cloud Computing. End users, IT professionals and vendors are all encouraged to participate.

The London format CloudCamp will start with 8 five-minute "lightning" talks, followed by breakout unconference discussions. Finally you get the chance to do more networking over beer and pizza in the Benjamin Britten Lounge overlooking the Abbey. In response to feedback requesting more breakout time in separate rooms, we have arranged three rooms from 20h00 to 21h00 for the unconference discussions.

This is the community's unconference; we want you to determine the content. Please tell us what you would like to hear about, what you would like debated, and whether you want to give a talk or lead a discussion. Please email me your thoughts using the CohesiveFT link [top right].

Agenda

18h15 Registration

19h00 Lightning Talks - prompt start please!

20h00 Unconference Discussions

21h00 Networking - Beer and Pizza

22h00 Close

Content

As the content and topics firm up they will be posted on www.cloudcamp.com/london

Check out www.cloudcamp.com/london2 for details of the last London event.

Sponsors

CloudCamp would not be possible without the generous support of its sponsors:

CohesiveFT, SkillsMatter, Rightscale, Provide, AServer, Flexiscale, Cloudsoft, Microsoft, Zeus, and more to follow.....

Location:

Queen Elizabeth II Conference Centre

Broad Sanctuary Westminster

Westminster

London SW1P 3EE

United Kingdom

Monday, January 19, 2009

The Case for "Private Clouds"

Generally the debate goes like this: on one side you have those such as Andrew Conry-Murray and Eric Knorr who say (I'm paraphrasing) that the "Cloud" is an offsite infrastructure provided as a service. On the other you have those like Jay Fry and James Urquhart who say "Internet has Intranet. Cloud Computing has Private Clouds. Similar disruption, localized scale."

As for me, I suppose it depends if you classify cloud computing as Internet-based computing or the Internet as an infrastructure model. As I've said before, I believe cloud computing is a metaphor for the Internet as an infrastructure model. A private cloud is therefore applying that model to your data center; whether it's closed to the outside world or not is secondary.

Introducing Cloud Hopping

With the latest virtual networking technology, such as VMware's vNetwork Distributed Switch, Cisco Nexus® 1000V and Virtual Square's Virtual Distributed Ethernet project, we may for the first time have a level of virtual network adaptability that enables us to directly modify an underlying virtual switch and related network protocols across a series of regional hybrid data center environments. In the coming years the opportunity will be in addressing the intersection of multiple data centers and cloud providers into that of a unified cloud infrastructure that focuses on providing an optimal application experience anywhere, at any time, for any application.

With these recent advancements we now have a fundamentally new method for accurately determining geographic cloud resources for internet applications & services. These advancements open up a world of opportunity for new geo-centric application architectures and deployment strategies. An opportunity to change the fundamental way in which we look at distributed or wide area computing. I'm calling this "Cloud Hopping".

The general idea of "Cloud Hopping" is to place additional cloud capacity directly at the intermediary points (or hops) between multiple network providers on a geographical basis. We can then start to look at location information for each Internet hop to determine an optimal geographic scaling architecture. For example, the server might look at the first five hops from the client to the server. If four of the five routers have addresses within the geographic area of interest, the server can conclude that the client is probably within the geographic area and request additional cloud resources for those particular users.
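
To make the five-hop example above concrete, here is a minimal Python sketch of that check. It assumes the hop addresses have already been collected (for example by a traceroute-style probe, which is not shown) and that the "geographic area of interest" can be approximated by a list of IP networks. The function names, the networks and the addresses are all hypothetical; the addresses come from ranges reserved for documentation.

```python
import ipaddress


def hops_in_region(hop_addresses, region_networks, window=5):
    """Count how many of the first `window` hops fall inside the region.

    `region_networks` is an assumed stand-in for real geolocation data:
    a list of CIDR blocks taken to belong to the area of interest.
    """
    networks = [ipaddress.ip_network(net) for net in region_networks]
    first_hops = hop_addresses[:window]
    return sum(
        1
        for hop in first_hops
        if any(ipaddress.ip_address(hop) in net for net in networks)
    )


def client_probably_in_region(hop_addresses, region_networks,
                              window=5, threshold=4):
    """Apply the 'four of the first five hops' rule from the text."""
    return hops_in_region(hop_addresses, region_networks, window) >= threshold


# Hypothetical route: four of the first five hops sit in the region.
route = ["192.0.2.1", "192.0.2.17", "198.51.100.3", "203.0.113.9", "192.0.2.200"]
region = ["192.0.2.0/24", "198.51.100.0/24"]

if client_probably_in_region(route, region):
    print("client looks local: request extra capacity near this region")
```

The window and threshold are parameters so the rule can be loosened or tightened; the step that actually requests additional cloud resources is left out because it depends entirely on the provider.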

I feel the greater issue is that up until recently anyone who created a website or Internet application was forced to use the same generic network interconnects (hops). The benefit was that generic Internet backbone providers offered a level playing field, but this also meant that those who were in a position to request or pay for additional resources had no way to do so. Let me be the first to point out that there are some worrisome consequences to this approach, mainly around network neutrality, or more specifically the concept of data discrimination, whereby network service providers guarantee quality of service but only for those who pay for it.

I think the underlying problem within the fight for network neutrality is that it has been a completely misguided crusade by those who fundamentally don't understand that innovation needs a scalable revenue model as well as distributed technical capabilities in order to succeed. To that end there currently is no motivation for network backbone providers to offer enhanced capabilities because, more than anything, the technology to enable it hasn't been available. Cloud computing is for the first time making this vision a reality.

With the recent advancements in virtualized networking and cloud computing the barriers are finally disappearing. The main stumbling block seems to be public opinion, which focuses on the potential misuses of such technology without looking at the far greater advancements that this type of technology could enable.

What a cloud hopping infrastructure may offer is the best of both worlds: a per-use, consumption-based revenue model that gives backbone providers both an incentive to enhance traditional featureless network backbones and a way to grow their bottom line. It is a model that rewards those who take the lead in building a new generation of geo-centric network cloud capabilities. Another way to think of this is that the Internet itself becomes a potential point for scaling your compute resources, beyond just the typical bandwidth point of view. Think of it as hop-by-hop scaling.

It seems to me that network neutrality may not be about "forcing" new fees on Internet application providers but instead about offering additional choices for those who choose to improve their users' computing experience.

The Death of Beta

Great post over at Gigaom today on the subject of using the term "Beta". No, I'm not talking about the short-lived Betamax video format from the 80's, but the tagline du jour of the last few years. In the article Blake Snow says that the term "Beta" "is dissipated and confusing" and I couldn't agree more.

First of all, before any of you blast me for being a hypocrite, I am the first to admit that we at Enomaly, like others, had used the term extensively over the last few years. More recently we decided to drop its use and move to a more traditional "code release" style, which gives each release candidate a number along with an outline of its level of testing & QA.

I found this description of beta in the article very telling: "In software speak, it means “mostly working, but still under test,” according to the Jargon File, the unofficial glossary of hacker slang. As the nerd dictionary so humorously puts it, “Beta releases are generally made to a group of lucky (or unlucky) customers” - either in-house (closed) or to the public (open)."

One thing the article misses is that "Beta" hasn't always been a marketing tagline. Those software developers in the crowd may have heard of something called "the software release life cycle". The general idea is that software development is composed of different stages that describe the stability of a piece of software and the amount of development it requires before final release. Each major version of a product usually goes through a stage when new features are added, or the alpha stage; a stage when it is being actively debugged, or the beta stage; and finally a stage when all important bugs have been removed, or the stable stage. In its earlier form beta wasn't meant as a marketing ploy, yet more recently it has become just that, a kind of Web 2.0 gimmick.

For us, Beta / Alpha / Pre Alpha, or whatever has become a method for us to describe our release candidates, early, late or in-between. As in any open source project, we have a wider list of users who request access to these early releases and help us for the most part find bugs. This is one of the best uses for a "beta" program. FREE Q/A.

The problem with a wider beta release is that most users don't draw a distinction between a beta and a production-ready release; the assumption is that if you're making your application available, it's going to be fully tested and ready for production. On the flip side, most companies who use the term beta are in a sense saying exactly the opposite: it's not ready, but we'd like you to try it anyway, in an attempt to gain some market share or whatnot.

Amazon's use of beta has been one of the best I've seen. Amazon's EC2 service was in beta for more than two years, with numerous bugs and problems being spotted and potentially fixed by a large pool of beta testers. Amazon was able to gain a massive market share before removing the beta tagline late in 2008. They are now undoubtedly the biggest cloud computing infrastructure provider, all thanks to their mastery of the "beta". Don't get me wrong, Google has also done a great job of using betas, but they already have an unfair advantage, so regardless of the beta tagline, Gmail would probably have done just as well because of the broad Google user base.

So is beta dead? I doubt it, but hopefully for customer facing apps it is.

Upcoming 2009 CloudCamps

In David's post he says "If you haven't jumped into the water yet, what are you waiting for? At least educate yourself. VMworld is proof that admins are arming themselves with knowledge -- the conference has grown at a tremendous rate over the last few years. But that's VMware and virtualization, and perhaps you believe you have a handle on that already. So what about the cloud? Are you there yet? Need more information? It sounds like that's where CloudCamp comes in... though I get flashbacks to the 80's and SpaceCamp references when I think about the name. ;)"

I'd like to thank our CloudCamp co-founders Dave Nielsen (CloudCamp Manager), Jesse Silver (Sun), Sam Charrington (Appistry) and Sara Dornsife (Sun) for all their hard work over the last 6 months. With events planned on 5 continents, now is the time to get involved and start your very own local event. Get in touch and we'll help you get moving. We've also set up a mailing list if you'd like to keep up to date.

Some Upcoming CloudCamps

Friday, January 16, 2009

The Cloud Economy and the Million-Dollar Private Cloud

In the article, Coleman claims that "Large corporations may have tens of thousands of servers. Coleman says one customer’s deployment rang up, before discounts, at $50 million." I think the more telling question is how much was it after discounts?

I'm having a hard time believing that any company, regardless of how big it is, is shelling out anything close to $50 million for private cloud management software in today's economy. From the conversations I'm having, the big trend is not in creating wholly encapsulated private clouds, but in the creation of so-called hybrid clouds that span multiple existing data centers and remote cloud providers. Some of the biggest projects I've heard of have been in the range of $2-3 million for software-related expenses. The notable exceptions are companies such as Rackspace, AT&T and a few other "telecoms" who have reportedly been spending tens of millions on their new data centers & cloud-related projects.

I find Rackspace's strategy of acquisition to be particularly smart. Why spend months building when you can acquire the emerging leaders for cheap, as with their recent purchase of Slicehost? Although Slicehost isn't traditionally a cloud provider, with a little massaging of the Slicehost offering they could easily grab a nice piece of the overall cloud hosting market.

Back to the question of Million-Dollar Private Clouds: from what I can tell, most of this money has been allotted to the traditional hard costs such as hardware and real estate. Less than 5% is typically allotted to software. Will there be $50 million private cloud deployments? Some day, probably, but I'm not sure we'll see this anytime soon.
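
A quick back-of-the-envelope check shows why the $50 million figure is hard to square with that split. The sketch below assumes, purely for illustration, that the "less than 5%" software share applies to the whole deployment budget; the function name is hypothetical.

```python
def implied_total_budget(software_cost, software_share_percent):
    """Total budget implied if software is the given percentage of spend."""
    if software_share_percent <= 0:
        raise ValueError("software share must be positive")
    return software_cost * 100 / software_share_percent


# A $50 million software bill at a 5% share implies about $1 billion overall.
print(implied_total_budget(50_000_000, 5))   # prints 1000000000.0

# The $2-3 million software projects imply $40-60 million overall.
print(implied_total_budget(2_000_000, 5))    # prints 40000000.0
print(implied_total_budget(3_000_000, 5))    # prints 60000000.0
```

Since the share is described as less than 5%, these totals are lower bounds: under that assumption a $50 million software bill would sit inside a deployment costing at least $1 billion.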

In the short term the biggest opportunity may be in customized cloud providers; one great recent example is AMD's Fusion Cloud. AMD's press release describes Fusion Cloud as an on-demand cloud which provides access to over 1000 ATI graphics cards working in tandem with AMD processors and chipsets to render and compress graphically intensive content, which is then streamed over the Internet to all sorts of devices, eliminating the need for computational effort on the receiving end. The general idea is that Internet-connected devices, from cell phones to laptops (the so-called "three screens"), would be able to play games and HD movies without draining much of their battery life or even requiring potent hardware, because most of the heavy lifting is done offsite in the cloud. This also fits well into Microsoft's "Software + Services" strategy. A question I had about AMD's announcement was what software they are using to enable their cloud infrastructure, and how much they spent. At 1,000+ CPUs I would estimate it is in the $500k-$1M range.

Unlike Coleman, I do feel the opportunity this year is in giving companies that have existing data centers a way to create fast, easy, and cheap cloud infrastructures, not the opposite.

Tuesday, January 13, 2009

Mountain View Cloud Computing Interoperability Event (Jan 20th)

As some of you may have heard, we (CloudCamp and Cloud Computing Interoperability Forum) are working with Stephen O'Grady of Redmonk and David Berlind of TechWeb to host a Cloud Computing interoperability event in Mountain View, CA on Jan 20th from 10am-3pm, the day before CloudConnect (and unfortunately, the same day as CloudCamp Atlanta). Like previous CCIF events, the purpose is to bring together like minds to discuss issues surrounding Cloud Computing Interoperability. Those of you who found the CCIF event in September useful should attend this event.

The goal of the meeting is for everyone to continue the discussion, identify and compare customer needs to existing assets and technologies and to pragmatically work towards better cloud interoperability. The 1/2 day event will include an opportunity for groups to break out for more focused discussions.

Cloud computing solution providers both big and small are encouraged to join in this exploration of how the industry can pull together to establish better interoperability that ultimately lowers the barrier to the adoption of cloud computing, resulting in a rising tide for all.

Also, while this event remains open, we have limited space. So we are limiting attendance to no more than two persons per organization, and to only those organizations who are actively engaged in interoperability issues. Please do not register if your company does not fall into this category.

- To pre-register, visit: http://interoperability-event-09.eventbrite.com/ - someone will follow-up with an email to confirm your registration

- if you have any questions, contact Dave Nielsen (dave@platformd.com)

IMPORTANT: Your registration is not complete until you receive a registration confirmation.

----

Wall Street Cloud Interoperability Forum

I'd also like to note that we're putting on a Wall Street Cloud Interoperability Forum this March and are looking for sponsors and organizers. Please feel free to get involved at http://groups.google.com/group/cloudforum

---

Sunday, January 11, 2009

Time for an Amazon Partner Program & Product Roadmap

Are you kidding me? Amazon Web Services is a company that bases their business on others who build their own businesses on Amazon's infrastructure, yet doesn't have a partner program? AWS appears to have a complete lack of interest in the ongoing success of their bread-and-butter developer ecosystem. It is a joke to say they give "time to shift". Shift to what? A business model that doesn't compete with AWS directly?

I don't mean to pick on Amazon; they've done more for moving the cloud community forward than just about anyone. But come on, it's been more than 7 years since Amazon launched Amazon Web Services, yet they still don't have a partner program in place. Is the reason that they don't believe they need one? This seems completely contrary for an application service provider that focuses primarily on others who develop applications on their services.

Think about it: AWS focuses on software developers, so why doesn't Amazon publish a product roadmap? I think the answer is clear: they're iterating based on what works for their ecosystem, using it as a kind of massive market research tool. Why publish a roadmap that tells the world your plans, thus limiting any potential for users to try out potential future "AWS" revenue avenues? Sure, some may point to their super secret "technical advisory board", only available to their top customers and contributors, an advisory board whose first rule is "it doesn't exist". At the end of the day, AWS can't just give notice that they're going to compete with you; they need to provide more transparency by publishing a roadmap at least 6 months out. 300,000+ users need to demand this.

It's time for AWS to get a partner program in place and get a clear product roadmap. Enough said.

Saturday, January 10, 2009

The Environmental Impact of Cloud Computing

In a recent blog post I also looked into the concept of "Carbon Friendly Cloud Computing". More recently a new term has emerged to describe a programmatic ability to monitor, manage and control your carbon footprint, which has been referred to as Carbon Information Management. So I had a crazy idea: why not combine the ability to monitor your energy consumption levels (aka green computing) with the ability to manage your carbon levels and the ability to manage your cloud infrastructure? Well, I guess I wasn't the only one with this idea.

Wissner-Gross has set up a site at co2stats.com to help you do just that. According to the site, CO2stats provides a service that automatically calculates your website's total energy consumption, helps to make it more energy efficient, and then purchases audited renewable energy from wind and solar farms to neutralize its carbon footprint.

Digging a little deeper, I found that they basically offer a software suite that automatically monitors your site's energy usage, gives you tips on how to make your site more energy-efficient, and purchases the appropriate amount of audited renewable energy from wind and solar farms. The site goes on to compare the service to the hacker-proof software used by many websites to secure online payments: CO2Stats software continuously scans your website so that it can monitor your site's energy usage each time someone visits your site. According to the site, CO2Stats is very smart, and is able to capture a large amount of data about your site's total energy consumption.

CO2Stats claims you can use any web hosting provider you want and says "Green web hosts only make the servers on which your files sit green, and most don't even provide proof of that. CO2Stats is a turnkey solution for making your entire website experience green, from your visitors computers to the servers and the networks that connect them, without needing to switch web hosts".

A very cool concept indeed and worth a look, that is if you care about your cloud's environmental impact.

Friday, January 9, 2009

Amazon Crushes Ecosystem - Launches AWS Management Console

The RightScale blog emphasizes that its cloud-enablement business is not based on Amazon alone. The post goes on to say; “RightScale manages clouds, that’s clouds in plural. It’s become a multi-cloud world - and that’s good for customers and therefore the market itself. We intend to continue RightScale’s role as a neutral provider of multi-cloud management support and portability in order to promote an enabling platform for both customers and ISVs - even as we continue to be a close partner of Amazon.”

The post paints a fairly optimistic picture of a multi-cloud world, one that may exist at some point, but not today. (This has been a main driver of our Cloud Interoperability efforts, which RightScale has also been involved with.) But let's be straight: for the most part, all EC2 users really require is a quick and easy web-based dashboard. All those extra features that EC2 management providers like RightScale, Ylastic and AWSManager offer aren't used until later, much later, if at all. What's more, most new cloud services coming online have their own graphical user dashboards, adding competitive pressure (look at GoGrid, Flexiscale and ElasticHosts).

What worries me about this announcement is that cloud providers may in a sense start using their ecosystem as a kind of market research tool to help determine what features and opportunities they will address next. My hope is that companies like Amazon may start acquiring the more promising cloud ecosystem partners rather than creating their own alternatives and crushing their "partners" in the process. AWS has gained its level of success because of its active commercial ecosystem and should take steps to embrace it, not kill it.

I think the folks at RightScale are correct in their view of the larger opportunity for a meta-cloud dashboard, one that enables the management of multiple cloud providers. I feel this will certainly become a critical point for any of these EC2 management consoles to continue as viable businesses, but until there is some sort of standard management API, this multi-cloud future is somewhat of a moving target.

Paul Lancaster, Business Development Manager at GoGrid, said it well:

"Better opportunities for other cloud vendors as AWS console de-values partners who build business on the platform. Good news for the competition."

--

Previous coverage.

Cloud Oriented Infrastructure

I think Anne is missing the point and quite possibly living in some kind of parallel universe. I wouldn't say SOA is dead, but rather that it has become ubiquitous. One can define a service-oriented architecture (SOA) as a group of services / applications that communicate with each other. The process of communication involves either simple data-passing or two or more services coordinating some activity. Intercommunication implies the need for some means of connecting services to each other. Most modern Internet-enabled applications at this point have some sort of web services component. Even basic http / https at this point could be considered a web service. What SOA has done a great job of is bringing the core ideas found within service-orientation to the broader technology industry. The goal of loosely coupling web-enabled services from the operating systems, programming languages and other technologies which underlie applications has become a mainstream concept. If by dead she means not used, then she is way off base.

In the near term the bigger opportunity may be in the area of cloud infrastructure enablement. That is, taking existing data center infrastructure, or what some are referring to as a hybrid data center, and making it Internet aware. The first steps toward this cloud infrastructure have already been set in motion with the work being done in so-called Service Oriented Infrastructures (SOI), which may also be described as virtualised IT infrastructure comprised of components that are managed in an industrialised / automated way. (Think the Model T assembly line applied to your data center and its applications.)

The next generation of Service Oriented Infrastructures will soon start to look more like what I have started calling "Cloud Oriented Infrastructures". These are infrastructures that can adapt to capacity demands and constraints using the same web services building blocks that have made both SOA and SOI so popular within modern enterprise IT environments. The bigger opportunity for Cloud Oriented Infrastructures will be in applying the flexibility of an ever-changing and adaptive approach to computing capacity management. In the same way that cloud computing has given applications the ability to adapt, we now have the ability to allow our underlying infrastructures to autonomously adapt using a mixture of local and remote (cloud) infrastructure.
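
As a rough illustration of the "mixture of local and remote (cloud) infrastructure" idea, the sketch below fills local capacity first and sends only the overflow to a remote cloud. The names, the abstract capacity units and the 20% headroom reserve are assumptions made for the example, not part of any particular product.

```python
from dataclasses import dataclass


@dataclass
class Placement:
    local: int   # capacity units served by the existing data center
    remote: int  # capacity units burst out to a remote cloud provider


def place_workload(demand_units, local_capacity_units, headroom_percent=20):
    """Split demand between local and remote capacity.

    A slice of local capacity (headroom_percent, an assumed policy knob)
    is held back so the data center is never driven to 100% utilisation.
    """
    usable_local = local_capacity_units * (100 - headroom_percent) // 100
    local = min(demand_units, usable_local)
    return Placement(local=local, remote=demand_units - local)


# Normal load fits locally; a spike overflows to the remote cloud.
print(place_workload(50, 100))    # Placement(local=50, remote=0)
print(place_workload(130, 100))   # Placement(local=80, remote=50)
```

A real system would re-run a decision like this continuously against measured demand; the point here is only that the existing data center stays the first choice and the cloud augments it.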

Looking ahead, I think you will start to see cloud-aware infrastructure deployments and related tools become much more commonly used. What is interesting is that building a private compute cloud may soon be more about augmenting your data center than replacing what you already have in place. I for one will be looking at those who look to improve what I have rather than trying to convince me to reinvent it.

Welcome to the world Sammy Cohen

After 3 days, or 62 hours, of early labour (poor Brenny) and a short 3 and a half hours for the rest, he came into this world one fist above his head like the little superman he is. Weighing in at 7 pounds, 15 ounces, he's a good size with long fingers and toes, a decent amount of light brown hair and eyes that have yet to choose a colour.

What was interesting about the whole experience was that after 62+ hours of contractions, Sammy decided to make his transition to actual labour rather quickly. So quickly that by the time Brenda and I clued into the fact we should probably think about going to the hospital, it was already too late. Luckily our midwife was with us, so little Sammy was born, yup, at home. I can now officially add midwife, or mid-husband, to my resume and LinkedIn profile. I will say I learned a lot more about birth than I had ever expected, but am very happy everything worked out so well.

The last few days have been crazy, but I couldn't have asked for anything more. I just registered www.sammy-cohen.com this morning. Now the hard work begins: teaching my little guy how to program in Python. (Just kidding)

If you're one of those people who like to look at other people's baby pictures, feel free to look here.

Wednesday, January 7, 2009

Sun Microsystems Acquires Q-layer

The Q-layer technology simplifies cloud management and allows users to quickly provision and deploy applications, a key component in Sun’s strategy to enable building public and private clouds. As businesses continue to rely more on technology to drive mission-critical processes, the agility of the datacenter determines the flexibility of entire organizations. The Q-layer software supports instant provisioning of services such as servers, storage, bandwidth and applications, enabling users to scale their own environments to meet their specific business requirements.

“Sun's open, network-centric approach coupled with optimized systems, software and services provides the critical building blocks for private and public cloud offerings,” said David Douglas, senior vice president of Cloud Computing and chief sustainability officer, Sun Microsystems. “Q-layer's technology and expertise will enhance Sun’s offerings, simplifying cloud management and speeding application deployment.”

Cloud computing brings compute and data resources onto the Web and offers higher efficiency, massive scalability and faster and easier software development. Sun is an ideal advisor and partner for companies that want to build cloud computing facilities within their organizations, and for companies and service providers that want to build publicly available cloud computing services, with the open technology, expertise and vision to help companies build, run and use their own clouds. For more information on Sun’s cloud computing strategy, please visit: http://sun.com/cloud.

The terms of the deal with Q-layer were not disclosed as the transaction is not material to Sun.

Friday, January 2, 2009

Fear, uncertainty and doubt in 2009

I thought I'd take a more pragmatic view of 2009. So let's look at the obvious aspects of our world as we enter 2009: financial crisis, food crisis, global climate change crisis, terrorists, Mideast crisis, and the list goes on. There appears to be a particular focus at the present time on any concept involving a crisis. In Marxist theory, a crisis refers to the sharp transition to a recession, which I think we can all agree is what happened in 2008. For me a crisis represents a crucial stage or turning point in the course of something much bigger. Which raises the question: what comes next?

So what does all this doom and gloom mean? I think it means that for those who aren't spending their time spreading fear, uncertainty and doubt, 2009 would appear to be the perfect time to hunker down and build, build and build. Your products, your team, your company.

In my conversation with Vijay Manwani, founder of Bladelogic, he said that one of the best things to happen to Bladelogic was closing their first round of funding just before Sept 11, 2001. He said the downturn meant they could recruit some of the best people, with little or no competition from other startups. In effect the downturn provided an economic void that enabled them to emerge at the other end far more successfully than if they had formed during the peak of an economic cycle.

A recent article in Wired magazine titled "How Economic Turmoil Breeds Innovation" also sheds some light on this subject. The article notes: "The most memorable crucible in modern history is the Great Depression. During that era, several firms made huge bets that changed their fortunes and those of the country: Du Pont told one of its star scientists, Wallace Carothers, to set aside basic research and pursue potentially profitable innovation. What he came up with was nylon, the first synthetic fabric, revolutionizing the way Americans parachuted, carpeted, and panty-hosed. As IBM's rivals cut R&D, founder Thomas Watson built a new research center. Douglas Aircraft debuted the DC-3, which within four years was carrying 90 percent of commercial airline passengers. A slew of competing inventors created television."

So who knows, maybe 2009 is the year we see the next IBM or Google emerge?